As the National Crime Agency launches a campaign warning of the increasing use of AI for sextortion, how do we protect our children when the threats are invisible?

The National Crime Agency (NCA) has launched a new awareness campaign highlighting the alarming rise of AI-powered sextortion, in which criminals use digitally manipulated images to blackmail victims, often without the victims ever having shared an intimate photo. With children being targeted at an increasing rate, many are left feeling ashamed, isolated, and too afraid to seek help. The campaign urges victims to stay calm and not to pay.

In today’s hyper-connected world, a disturbing form of abuse is spreading fast and silently preying on children and teenagers. Online sexual extortion, commonly known as sextortion, is on the rise, with perpetrators increasingly using artificial intelligence (AI) to exploit young people in terrifying new ways.

According to Ruth Mullineux Morgan, senior policy officer at NSPCC Cymru, “We know that sexual extortion really kind of crushes a young victim’s ability to trust and [can] result in kind of tragic circumstances, where we have had cases where we know that young people have taken their own lives. I think it’s something that has a deep impact on mental health. They have talked about turning to self-harm to cope and manage, you know, the strength of their feelings.”

The NSPCC has been researching this emerging threat. As Morgan explains, “We’ve been working on AI as an emergent risk at the NSPCC, and we’ve done a piece of research trying to understand the nature of the risk and make some suggestions about what needs to happen.”

Morgan further explains that AI “can be used to create child sexual abuse imagery. That imagery is then being used to extort, manipulate and blackmail children. So I think there are various harms that children encounter online. I think AI just adds a layer on top of all of that in terms of making it easier and intensifying it.”

Childline counselling sessions reveal that these AI-generated images are often so realistic that victims fear no one will believe they are fake.

Childline is another vital resource. “It’s a resource that’s there for young people if they need to talk, if they’re not ready to report but they want an adult to sort of talk them through the process,” Morgan says. The NSPCC also provides “messaging and resources for parents” while conducting research to better understand the nature of sextortion.

A 15-year-old girl told Childline, “A stranger online made fake nudes of me and it looked real. My face, my room. They must have taken photos from my Instagram. I’m scared my parents won’t believe they’re fake.”

In Childline counselling sessions, some teenagers have reported classmates using AI to create fake pornographic images of girls at their school.

One girl said, “These boys made fake porn of the girls, including me, and sent them to group chats. School had an assembly, then told us to forget it happened. But I can’t. Everyone thinks they saw me naked.”

While girls have long been seen as the primary targets, a shift is emerging. Boys now account for 68% of sextortion-related counselling sessions where gender was known. Boys are more often targeted for financial gain, while girls tend to face pressure to produce more explicit content.

A 16-year-old boy told a counsellor at the NSPCC’s Childline service, “This ‘girl’ messaged me on Discord asking what games I liked. She seemed into the same stuff. It felt nice, like someone really got me. I should have known it was too good to be true.”

For boys especially, the scam is often about money. Offenders demand payments, sometimes small sums and sometimes thousands of pounds, as documented in the NSPCC’s case snapshots.

“She said she’d post my pics to all my followers unless I paid. I gave her £20. Now she wants £10 a week,” said a 16-year-old boy.

Others are pressured within relationships, under the guise of trust.

A 14-year-old girl told Childline counsellors, “I was dating this boy in the year above. At first he asked for nudes, and I said no. But then he threatened to leak fake ones unless I did sexual favours.”

The NSPCC has implemented several initiatives to combat this growing problem. “Our counsellors speak to children every day who are blackmailed and threatened with having nude images of themselves shared online,” said Morgan.

One key tool is Report Remove, which Morgan says was “developed with the Internet Watch Foundation”. It is “a space where children can [go] through the Childline website” to “report nude images of themselves which have been shared online. And our trained counsellors will kind of talk them through it, but also those photos can be taken down off the internet, so it gives that kind of peace of mind to young people.” Since the tool’s pilot in 2019, “the [Internet] Watch Foundation has removed 1,395 images as a result of that.”

The NSPCC has been actively working on policy changes through the UK’s Online Safety Act. “That is a piece of legislation that gives Ofcom the powers to regulate the online space and pushes platforms to clamp down on this sort of online offending,” Morgan says.

Morgan identifies three key areas for improvement. The first is “enforcing minimum age limits”. “Most of the social media sites that children use in the UK do have them, and they tend to set the age at 13,” she says, calling for “the government to give new powers to Ofcom to hold services accountable, to make sure that they enforce these age limits and they have better ways of verifying a child’s age.”

Second, addressing private messaging platforms. “We are really concerned about how much of this is happening on private messaging, like WhatsApp. If the platform says it’s not feasible, then they don’t have to do it.”

Third, improving youth representation. “We feel like there needs to be more of a formal mechanism for that moving forward,” Morgan says, stressing the importance of “taking an intersectional approach to getting all children’s voices heard on this.”

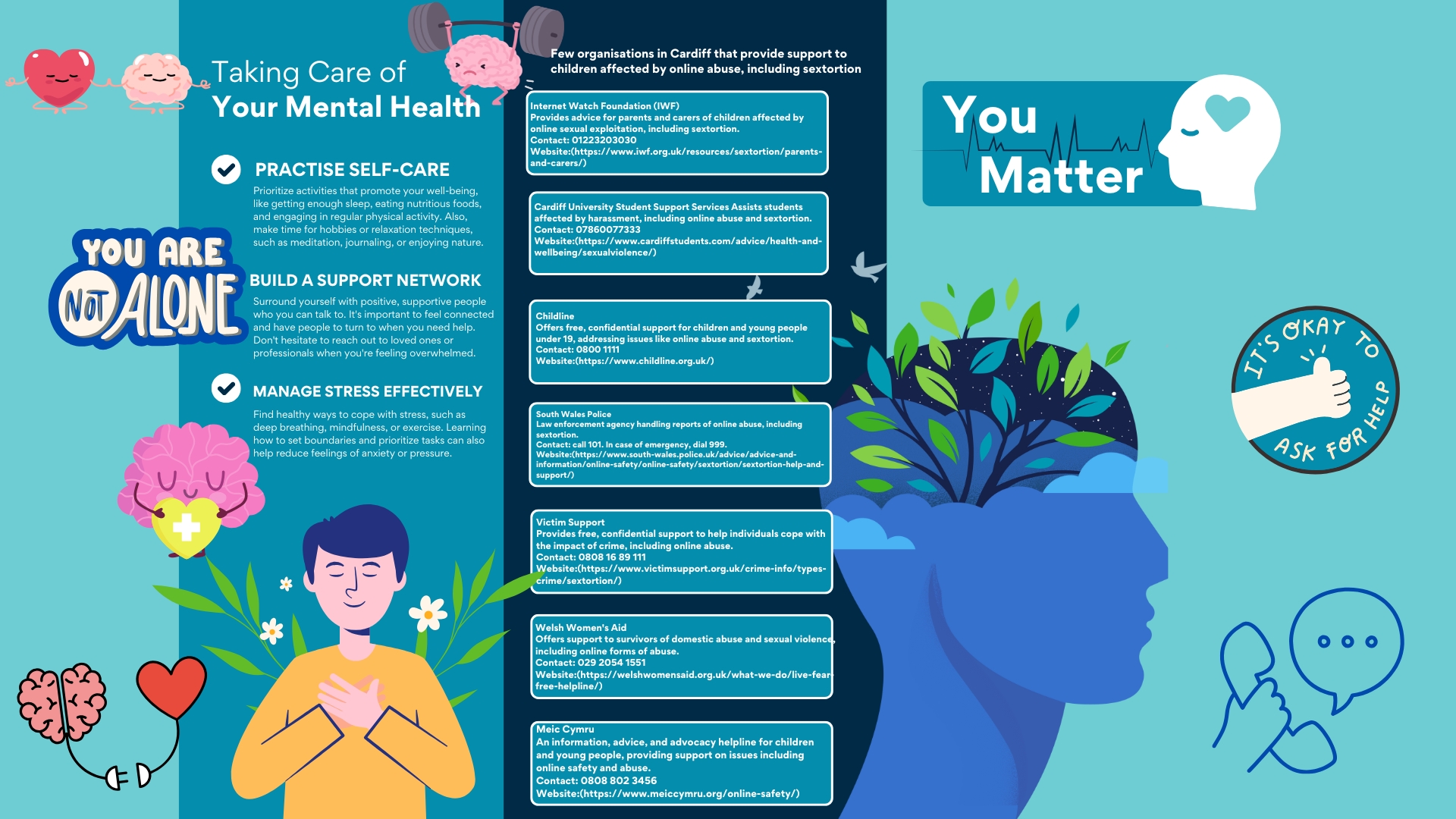

The psychological impact is deep and lasting. Victims often experience fear, shame, anxiety, and thoughts of self-harm, as revealed in the NSPCC’s case snapshots.

Parents are often the first to notice something is wrong, but not always the first people children turn to. Warning signs include withdrawal, secretive device use, mood swings, and unexplained requests for money, as shared with the NSPCC Helpline.

As AI tools grow more powerful, the tactics of those seeking to exploit children evolve too. But we can fight back.

Morgan’s message is clear: “To every young person facing sextortion: You are not alone. It’s not your fault. Help is available and you deserve to be safe.”